Use Cases

Explore our team's project experience across industries and areas of expertise!

- Pharma & Biotech
- Healthcare
- Manufacturing
- Financial
- Real Estate
- DevOps & IT
- Customer Service
- Recommender System
- MLOps
- Other

Manufacturing Process Optimization with Advanced Data Analytics

big pharma, optimization, data science, data engineering

Problem

Drug manufacturing processes are extremely complex. We worked as part of a global Center of Excellence team supporting different use cases to better understand the processes, optimize them (i.e., increase yield), and improve the handling of unplanned events during manufacturing.

Solution

We collaborated with various internal stakeholders, including business customers, product managers, IT teams, data scientists, and drug manufacturing experts, to facilitate the analysis of requirements, development of hypotheses, and creation of robust data pipelines for end-to-end Machine Learning.

Benefits

We brought a better understanding of the manufacturing processes and helped optimize both the efficiency of the overall process and the handling of unexpected events.

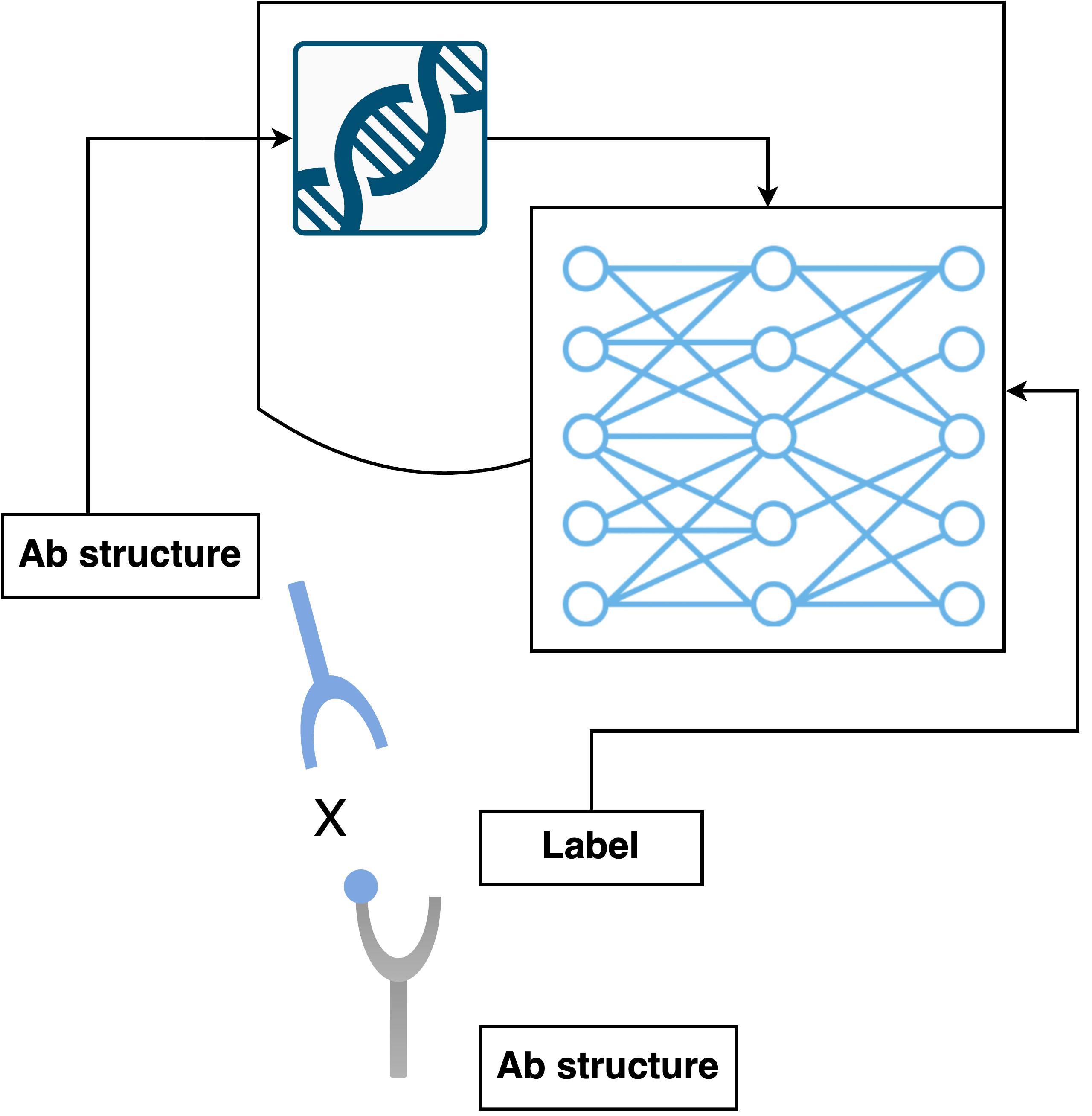

Decoding the Complexities of Protein Structures in Drug Discovery with Deep Learning

biotech, drug discovery, deep learning, model development, interpretability

Problem

Proteins are highly complex and fragile molecular machines that play a crucial role in all biological processes in living organisms, including humans. Over 200 million proteins are known, each with a distinctive 3D structure that dictates its function, so understanding protein structure is critical for advancing medical research and drug development. However, the high cost and time required to determine protein structures limit our ability to explore most known proteins. Unraveling these structures could revolutionize our understanding of life and accelerate the discovery of novel disease treatments.

Solution

A deep neural network helps us understand protein structures by predicting and modeling their 3D structure. Deep learning models can be trained on large datasets to recognize patterns and relationships between amino acid sequences and protein structures. This enables accurate and reliable models of protein structures that can be used in drug discovery and other medical research applications. Neural networks can also help identify potential drug targets and develop new therapies.

Benefits

We successfully implemented a neural network that can help identify novel drug targets, design new drug molecules, and optimize existing ones to improve their effectiveness. This also helps companies develop new drugs faster and with a higher success rate, potentially leading to increased revenue and market share. Overall, using neural networks to understand protein structures can offer a significant competitive advantage to companies involved in drug discovery.

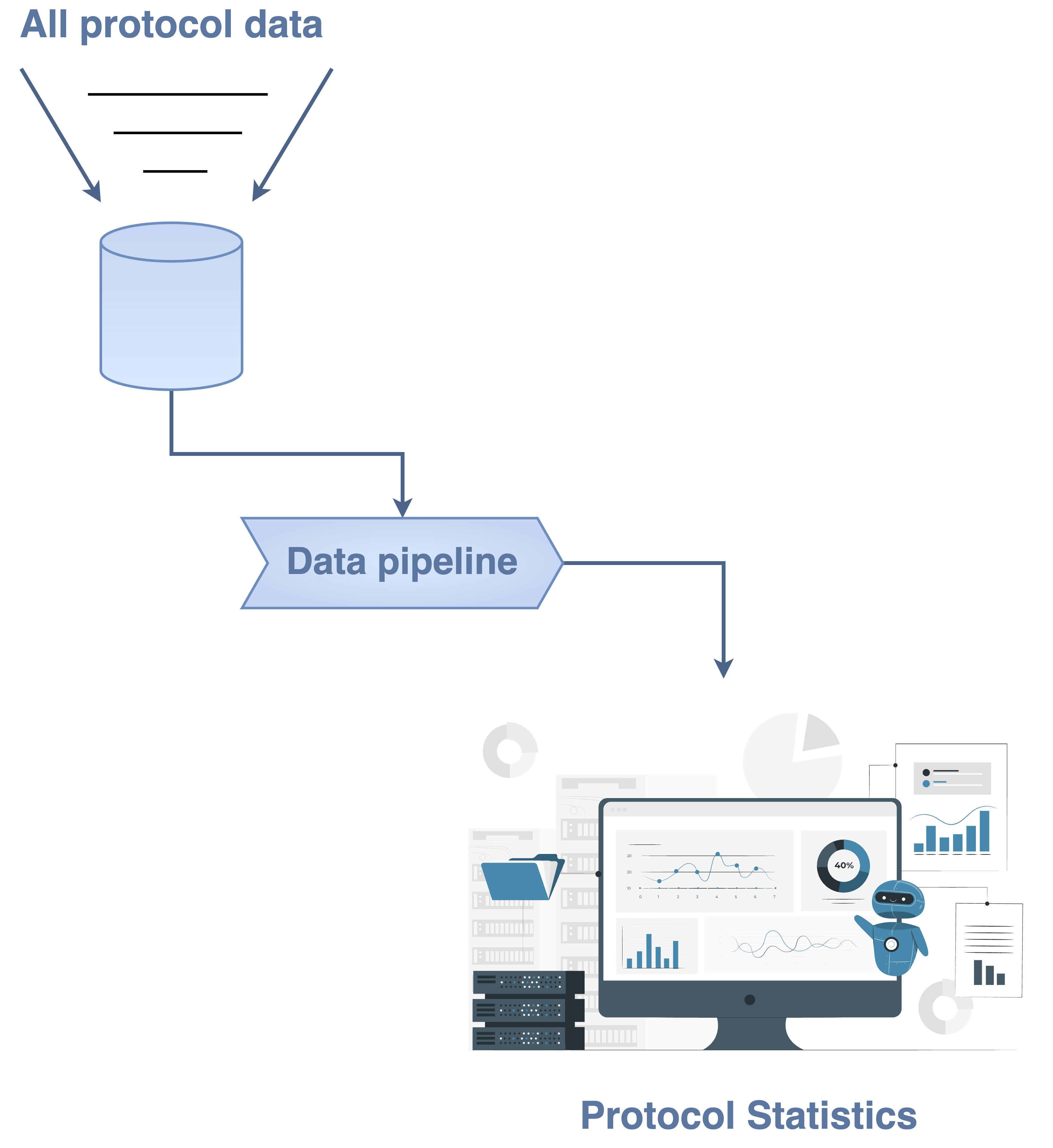

Protocol Data Analysis

protocol data, data pipeline, data analysis, data engineering

Problem

Many life scientists find it difficult to analyze data stored in a data warehouse and to review their calculated readout definitions because of the way the aggregations per protocol condition have been calculated. Data engineering best practices can serve as a touchstone for developing effective data pipelines that responsibly guide actions in data analysis.

Solution

We apply our effective approach to designing, implementing, and maintaining data pipelines in the cloud. The approach adapts to any kind of aggregation across different protocol conditions.
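
To illustrate what adapting to different aggregations can look like, here is a minimal Python sketch, assuming readouts arrive as hypothetical (protocol_condition, value) pairs. The names `aggregate_by_condition` and `AGGREGATIONS` are illustrative and not part of any client system; aggregations are registered as plain functions, so a new readout definition only needs a new registry entry.

```python
from collections import defaultdict
from statistics import mean, median
from typing import Callable, Dict, Iterable, List, Tuple

# Hypothetical record shape: (protocol_condition, readout_value).
Record = Tuple[str, float]

# Registry of named aggregations. Supporting a new readout definition
# means adding an entry here, not changing the pipeline code.
AGGREGATIONS: Dict[str, Callable[[List[float]], float]] = {
    "mean": mean,
    "median": median,
    "max": max,
    "count": lambda values: float(len(values)),
}


def aggregate_by_condition(
    records: Iterable[Record], aggregations: List[str]
) -> Dict[str, Dict[str, float]]:
    """Group readouts by protocol condition, then apply each named aggregation."""
    grouped: Dict[str, List[float]] = defaultdict(list)
    for condition, value in records:
        grouped[condition].append(value)
    return {
        condition: {name: AGGREGATIONS[name](values) for name in aggregations}
        for condition, values in grouped.items()
    }
```

For example, `aggregate_by_condition(records, ["mean", "count"])` returns one dictionary of statistics per protocol condition; in a cloud pipeline the same idea would typically map onto a group-by step in the warehouse or processing engine.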

Benefits

We bring value and experience in analyzing protocol data for health and pharmaceutical purposes, generating statistics, and extracting insights to address complex issues in life sciences.

Feasibility Study: Automated Sleep Diagnostics

healthcare, sleep medicine, time series, feasibility study

Problem

Analyzing polysomnography (PSG) data is a tedious yet necessary step in diagnosing sleep disorders such as sleep apnea. Physicians spend hours annotating patients' sleep phases and events while simultaneously considering their EEG and other physiological measurements.

Solution

In collaboration with two hospitals, we performed a feasibility study to develop an ML-based system that supports doctors in their PSG analysis. Following the first steps of the MLOps lifecycle (discovery and feasibility), we determined the doctors' expectations, assessed the quality of the available data assets, and devised a detailed plan for the development, deployment, and operation of the system.

Benefits

The pneumology department could rely on our expertise at the intersection of healthcare and machine learning, which resulted in an efficient turnaround from data transfer to the report of findings and recommendations. Thanks to our experience with academic institutions, the clinical director also benefited from our in-depth technical contributions to grant proposals.

Analysis of Brain Activity

healthcare, cardiology, time series, deep learning, model development, interpretability

Problem

Major depression and other psychiatric diseases affect an enormous number of people. To improve disease detection and treatment selection, a better understanding of the underlying mechanisms is needed.

Solution

We worked together with many collaborators from different fields, including medicine, psychiatry, machine learning, physics, and neuroscience. Using neuro-imaging and state-of-the-art statistical techniques, we analysed the relationships between behaviour, brain activity, and disease. Machine learning models were trained to distinguish between patients and healthy controls.

Benefits

Healthcare professionals, researchers, and other stakeholders could rely on us to manage and analyze large amounts of data and to provide detailed reports describing important outcomes. Automatic classification of disease opens the door to personalized healthcare. Findings were published in a high-impact journal.

Automatic Monitoring of Movement Abnormalities

machine learning, time series, deep learning, model development, interpretability

Problem

Movement disorders are common but abnormalities are often difficult to detect and monitor. Some standardized assessments for the rating of disease severity exist, but they require substantial time commitments and subjective interpretations by health care professionals (HCPs).

Solution

Many assessments can be automated by recording videos of patients' movements and then applying computer-vision and machine learning techniques to (1) provide objective measurements of the movements and (2) automatically estimate the severity of any abnormalities.
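
As a minimal sketch of one such objective measurement, the Python snippet below computes a joint angle from three 2D keypoints and the range of motion over a sequence of video frames. It assumes the keypoints have already been extracted by some pose-estimation model (not specified here); the function names are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) image coordinates of a keypoint


def joint_angle(a: Point, b: Point, c: Point) -> float:
    """Angle in degrees at keypoint b between the segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        raise ValueError("keypoints must be distinct")
    cos_theta = (v1[0] * v2[0] + v1[1] * v2[1]) / norm
    # Clamp to [-1, 1] so floating-point drift cannot break acos.
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_theta))))


def range_of_motion(frames: List[Tuple[Point, Point, Point]]) -> float:
    """Max minus min joint angle over frames of (proximal, joint, distal)
    keypoints, e.g. (hip, knee, ankle) for knee flexion."""
    angles = [joint_angle(a, b, c) for a, b, c in frames]
    return max(angles) - min(angles)
```

Tracking such interpretable quantities per session is one way disease trajectories could be followed over time; estimating severity would require a model trained on top of features like these.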

Benefits

These automated techniques can enable initial assessments and regular monitoring of movements in patients' homes, without the involvement of HCPs. They can provide interpretable quantitative measurements so that disease trajectories can be tracked objectively. This can support HCPs by freeing up their time so they can focus on cases that need their immediate attention.

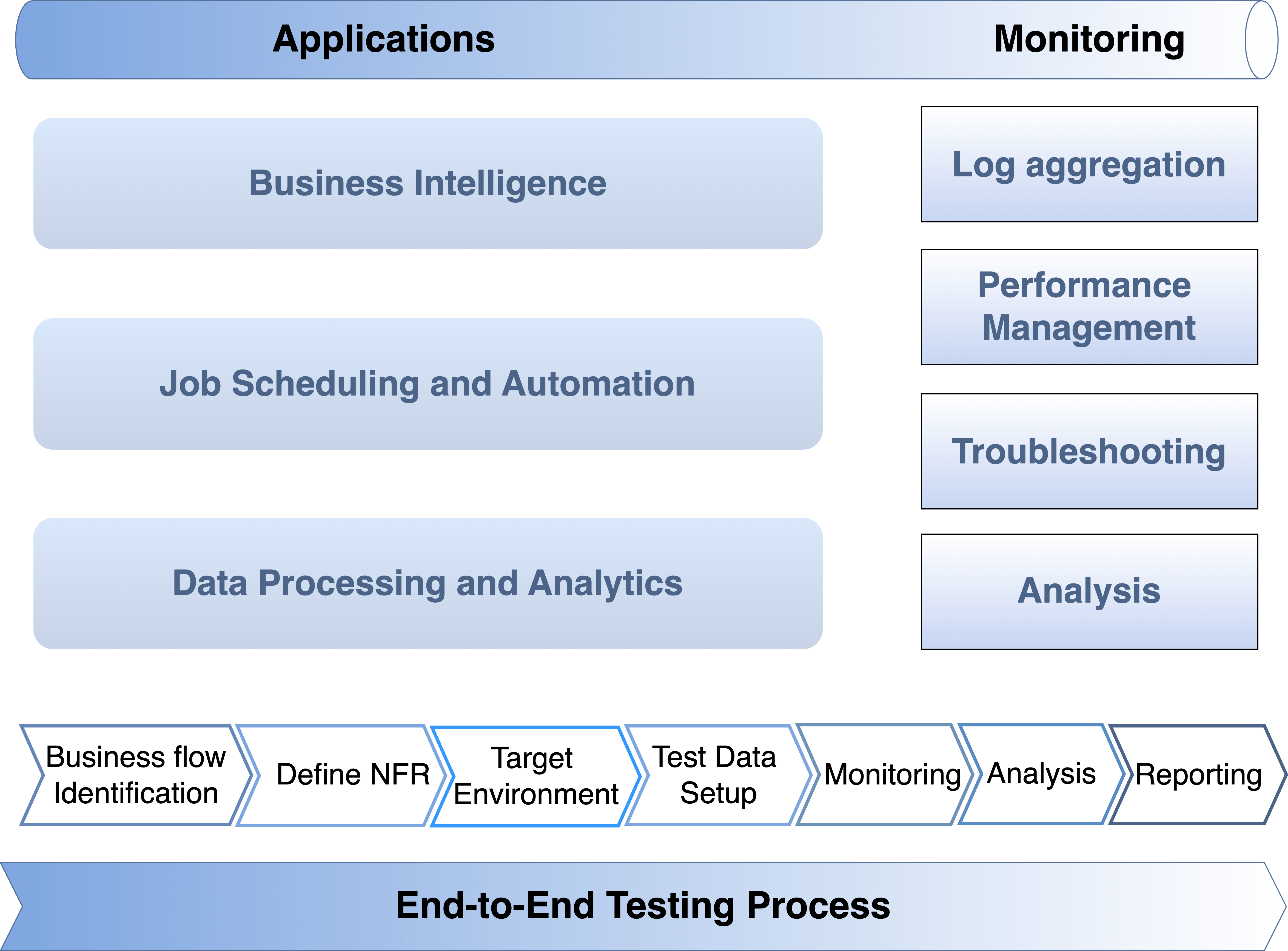

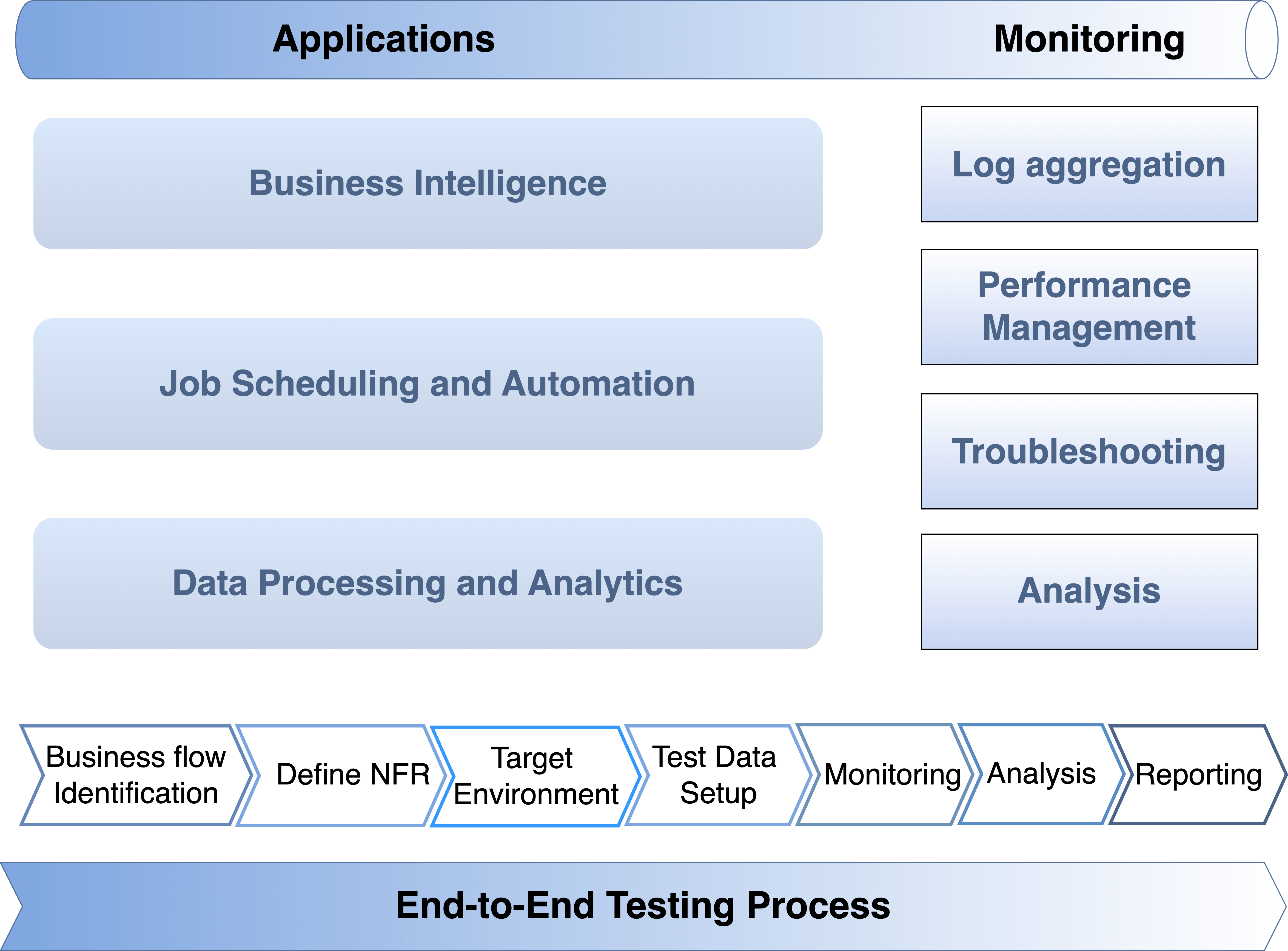

Data Analysis for Capital Reporting Platform Issues

data analysis, machine learning, reporting issues, monitoring, alerting

Problem

The integration of a crucial capital reporting system posed performance challenges, endangering its successful implementation. This resulted in the system failing to meet important regulatory requirements related to its performance and stability.

Solution

A customized data analysis approach was used to identify long-running jobs. Performance testing was then established, and proactive monitoring and alerting were implemented.

Benefits

By identifying the critical path, we focused troubleshooting efforts on the areas with the greatest impact on performance and stability. Additionally, the flow analysis was adjusted at a high level, and monitoring was implemented to enable in-depth analysis.
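
A minimal Python sketch of critical-path analysis, assuming the job schedule can be modeled as a directed acyclic graph with a measured runtime per job. Job names and durations are hypothetical; the function returns the longest chain of dependent jobs, which bounds the end-to-end runtime and is therefore where tuning pays off first.

```python
from functools import lru_cache
from typing import Dict, List, Tuple


def critical_path(
    durations: Dict[str, float], deps: Dict[str, List[str]]
) -> Tuple[float, List[str]]:
    """Return (total runtime, jobs) of the longest dependency chain.

    durations: runtime of each job; deps: job -> jobs it waits for.
    Assumes the dependency graph has no cycles.
    """

    @lru_cache(maxsize=None)
    def finish(job: str) -> Tuple[float, Tuple[str, ...]]:
        # Earliest finish = own runtime + finish of the slowest upstream job.
        best_time, best_path = 0.0, ()
        for upstream in deps.get(job, []):
            t, path = finish(upstream)
            if t > best_time:
                best_time, best_path = t, path
        return best_time + durations[job], best_path + (job,)

    total, path = max((finish(job) for job in durations), key=lambda r: r[0])
    return total, list(path)
```

For example, if `report` waits for `enrich` and `validate`, which both wait for `extract`, the function returns the chain through whichever branch is slower; speeding up jobs off that chain would not shorten the overall run.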

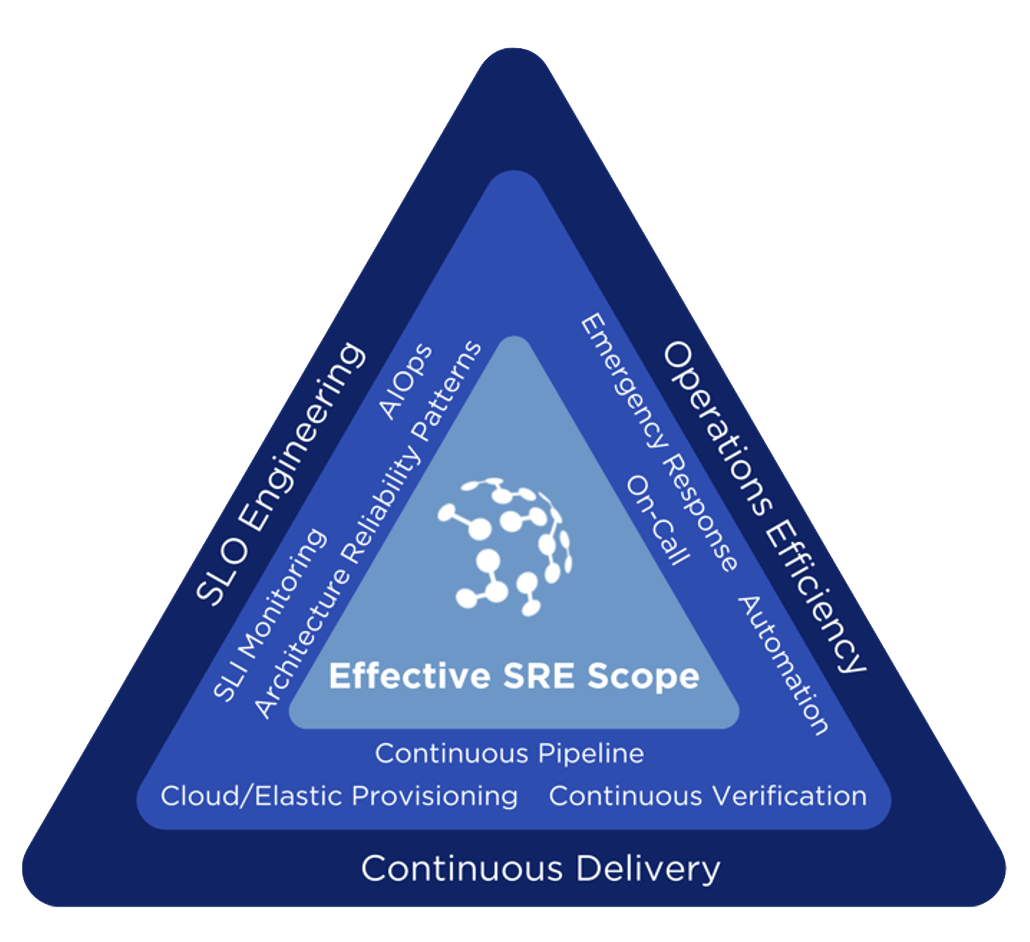

Reliability & Resilience Engineering Framework for a Global Bank in Zurich

reliability engineering, SLO Engineering, monitoring, observability, AIOps

Problem

In the banking industry, there is a lack of clear definitions of user expectations and service level objectives for critical banking applications. This can lead to confusion and dissatisfaction among users with differing expectations. Moreover, applications are developed, tested, and operated in silos without considering business expectations, which can result in applications that do not fully meet the needs of the business and its stakeholders. Finally, there is little insight into service level indicators, which makes it difficult to measure the effectiveness of the applications and improve them over time.

Solution

Our approach assesses these applications to identify reliability debt and formulates reliability stories to address it, which helps improve the overall reliability of the applications. Moreover, we recommend building an SLO Engineering practice that offers services to identify the SLIs and SLOs of applications, ensuring that these applications meet the desired levels of reliability. In addition, our framework identifies gaps in the observability, monitoring, and AIOps toolchain and defines strategic actions and an Observability Target Blueprint to close these gaps, improving the overall observability of the applications and enabling more effective monitoring. Finally, we create a training curriculum and content, including mandatory Reliability Engineering training, so that the necessary skills and knowledge are in place to maintain and improve the reliability of critical banking applications over time.
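
To make the SLI/SLO terminology concrete, here is a minimal Python sketch of an availability-style SLI (good events divided by total events) evaluated against an SLO target, together with the resulting error budget. The event counts and the 99.9% target used in the example are hypothetical; in practice SLIs are defined per application during the specification process.

```python
from dataclasses import dataclass


@dataclass
class SloReport:
    sli: float              # measured fraction of good events
    error_budget: float     # number of bad events the SLO allows
    budget_consumed: float  # fraction of that budget already spent
    slo_met: bool


def evaluate_slo(good_events: int, total_events: int, slo_target: float) -> SloReport:
    """Evaluate a ratio-based SLI against an SLO target for one time window."""
    if total_events <= 0:
        raise ValueError("need at least one event")
    if not 0 < slo_target < 1:
        raise ValueError("slo_target must be in (0, 1)")
    sli = good_events / total_events
    allowed_bad = (1 - slo_target) * total_events
    actual_bad = total_events - good_events
    return SloReport(
        sli=sli,
        error_budget=allowed_bad,
        budget_consumed=actual_bad / allowed_bad,
        slo_met=sli >= slo_target,
    )
```

With a 99.9% target over 1,000,000 requests, the error budget is about 1,000 failed requests; 500 failures would consume roughly half of it. Expressing reliability as a budget gives development and operations a shared, measurable basis for trade-offs.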

Benefits

Established SRE as a critical capability including the definition of roles and responsibilities, the review of development templates and best practices, and internal training and service offerings (e.g. SLI/SLO Specification Process). This helped the customer to advance their DevOps and MLOps transformation.

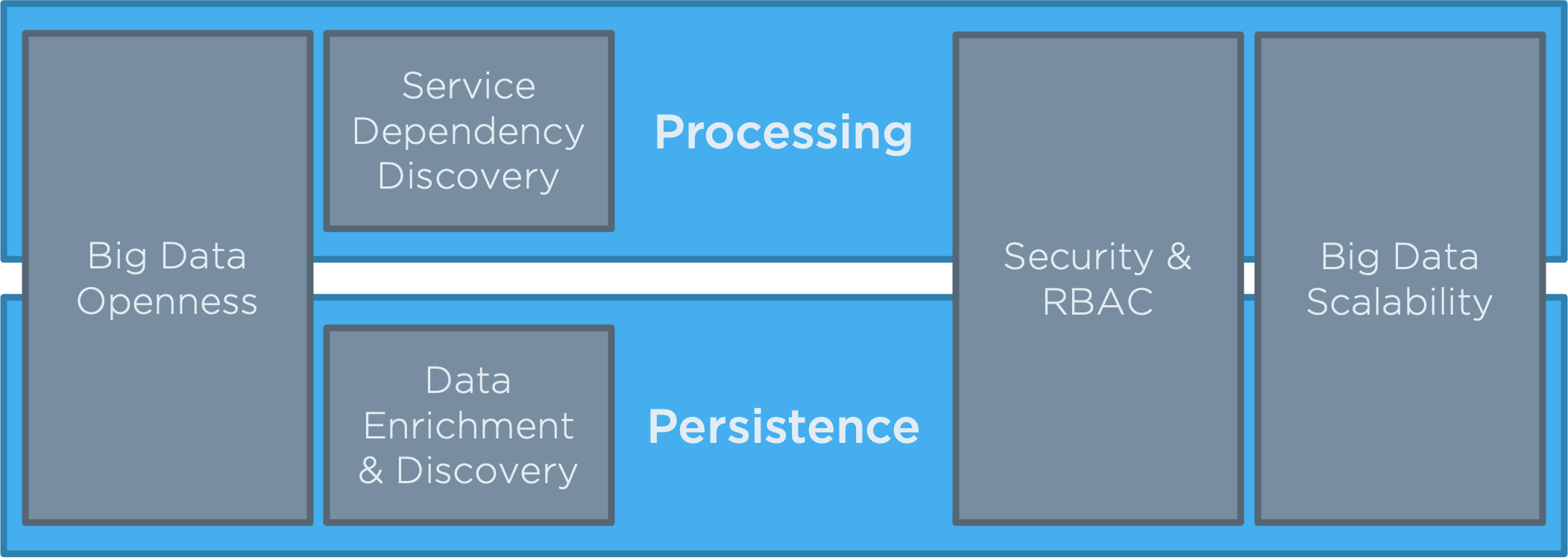

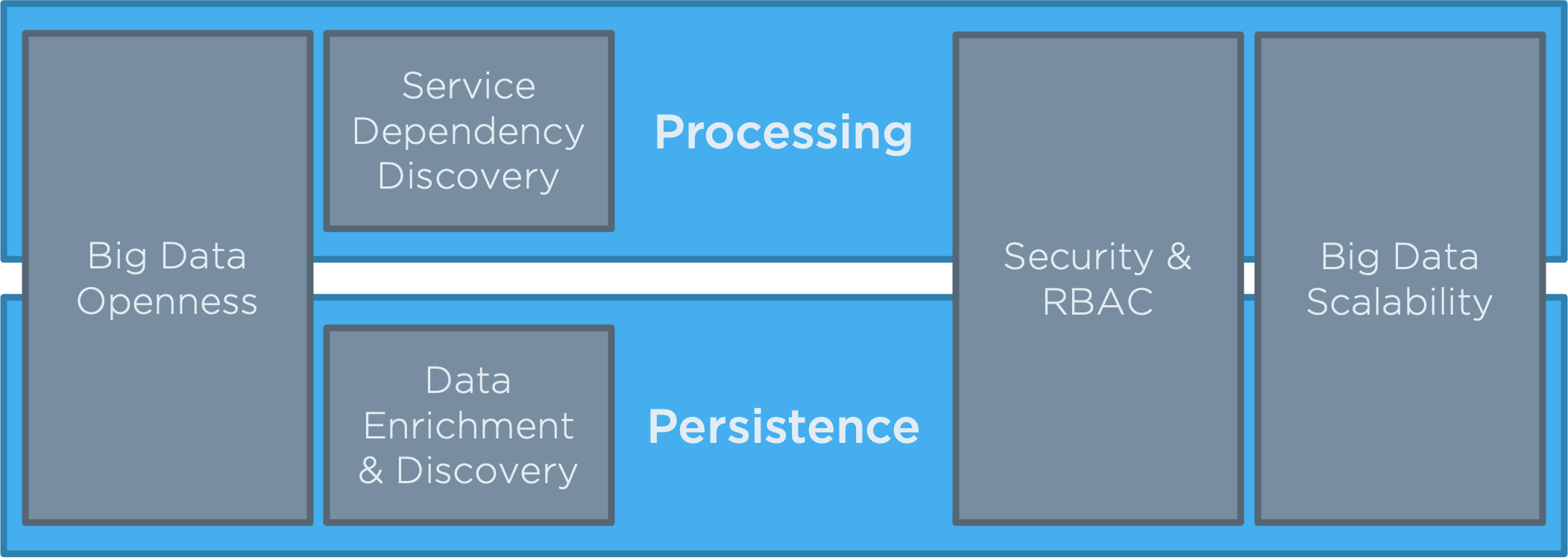

Observability for Big Data for Large Financial Institution

observability, monitoring, big data, advanced analytics, data management

Problem

No single source of truth for implementing advanced analytical Observability use cases such as anomaly detection for key systems.

Solution

Design and implementation of a centralized Big Data solution, onboarding of various data sources, and preparation of data for analytical use cases.

Benefits

De-siloed data management leading to a single source of truth for Observability data. Building the foundation for experimentation and implementation of use-cases for increased automation within the context of Monitoring and Observability.

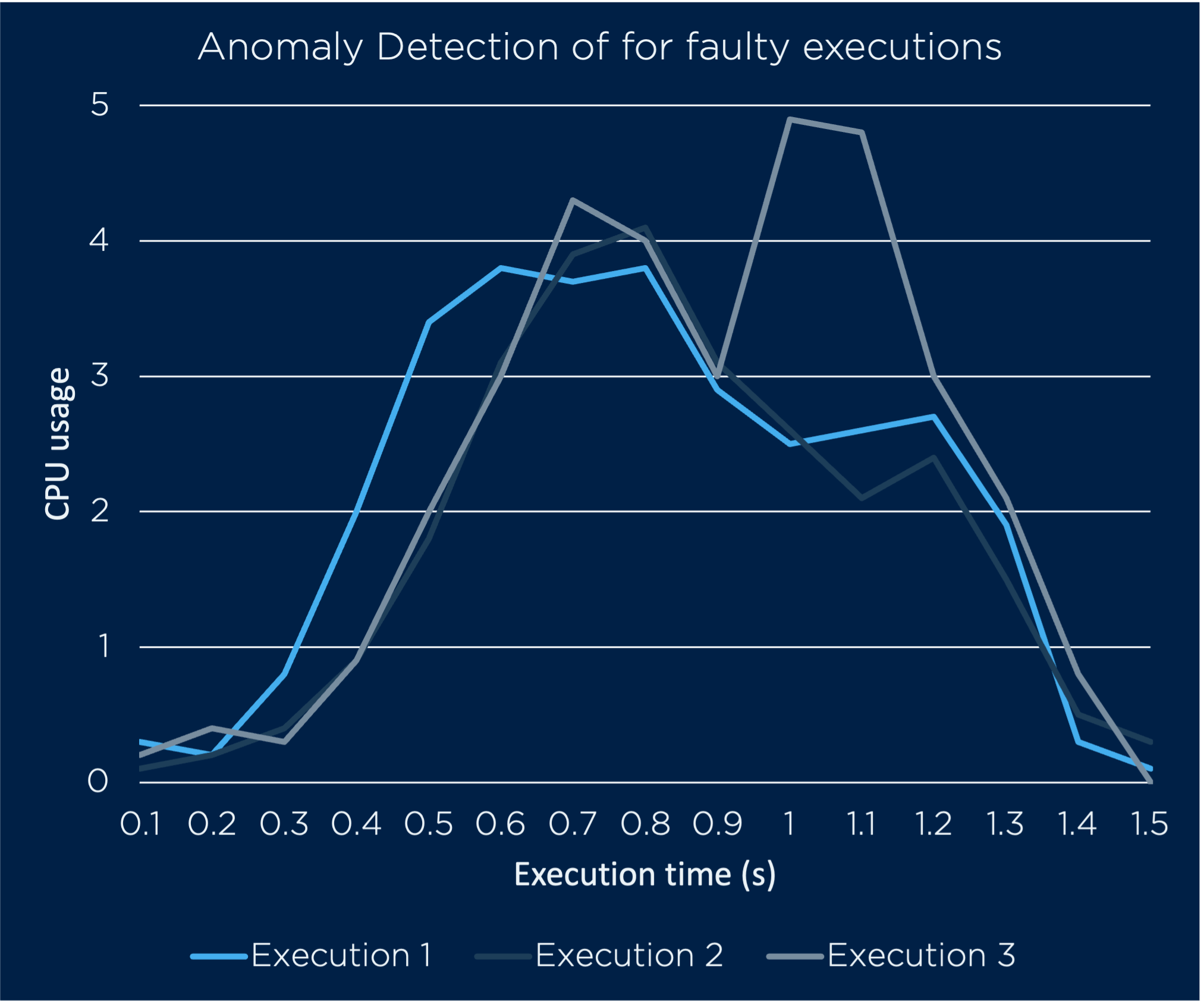

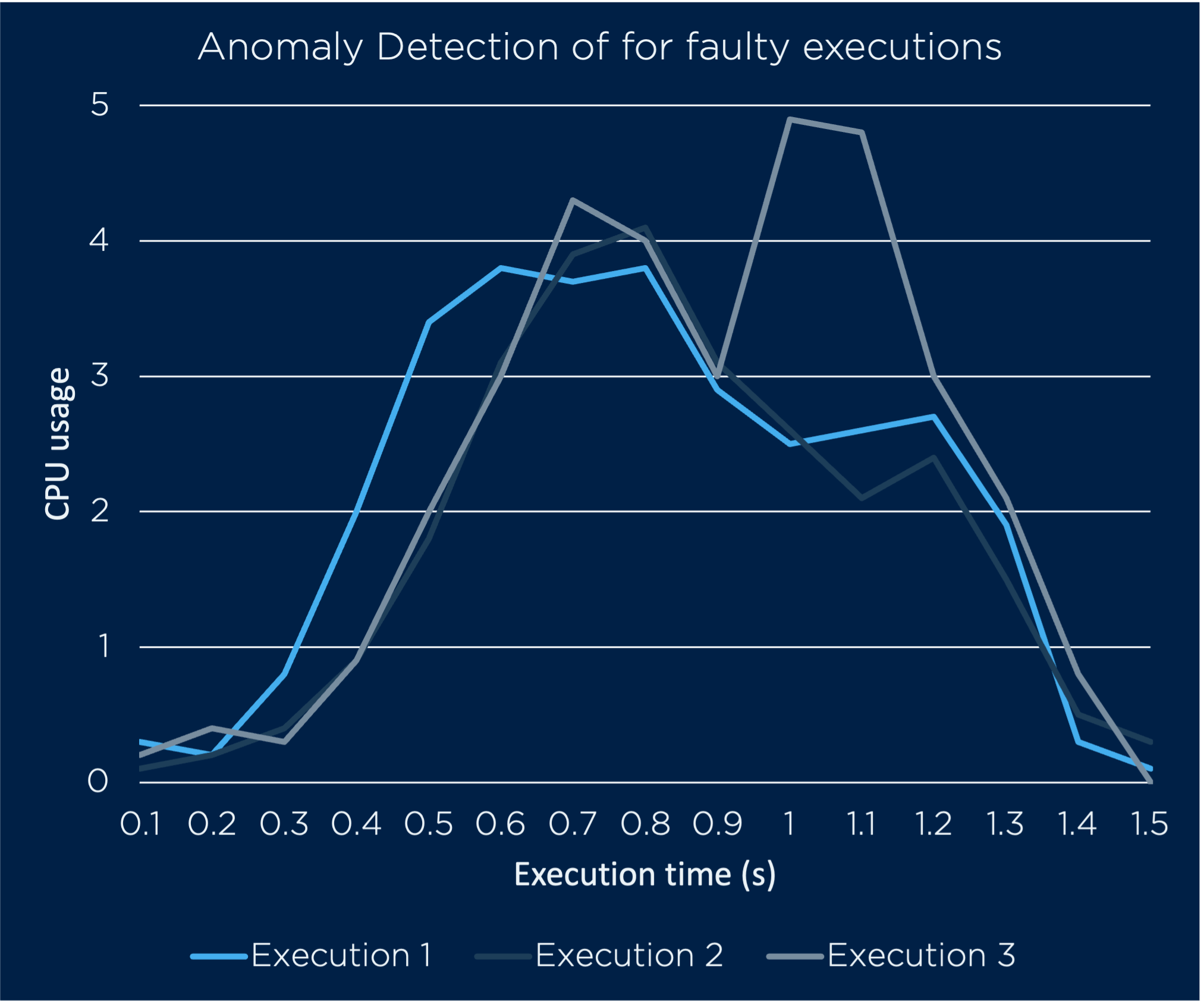

Machine Learning for Observability for Large Financial Institution

observability, monitoring, machine learning, service level objectives, reliability engineering

Problem

Reliability issues for business-critical systems can neither be predicted nor be mitigated due to a lack of understanding of the internal state of the application and its dependencies during operation.

Solution

Evaluation and implementation of AI-driven Observability use cases (e.g., anomaly detection) to improve the Observability capabilities for critical applications.

Benefits

Increased transparency for reliability issues and their causes. Ability to predict key-system problems or failures and increased capability to measure Service Level Objectives.

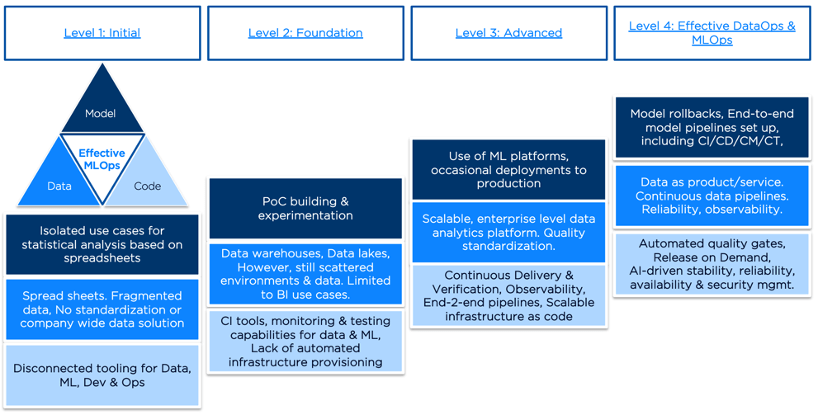

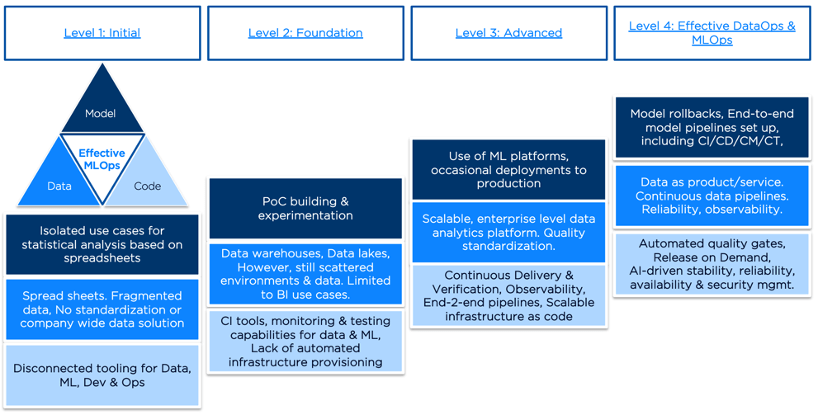

Data Analytics Platform Maturity Assessment for Medium-sized bank with international operations and clients

data analytics, maturity assessment, cloud transformation, mlops, devops

Problem

The data analytics foundations were set around an enterprise data hub that collects data from SAP (ERP) & Avaloq (core banking platform). Capabilities were limited to structured data storage and data delivery for business intelligence (BI) use cases.

Solution

Maturity and requirements assessment across different dimensions: Data, ML models, code and infrastructure. Mapping of capability improvement suggestions to parallel DevOps and cloud transformation initiatives.

Benefits

Target picture, roadmap, and work packages for a central, scalable, and integrated data analytics platform including capabilities for advanced analytics (e.g. machine learning, AI), continuous delivery, reliability, and observability.

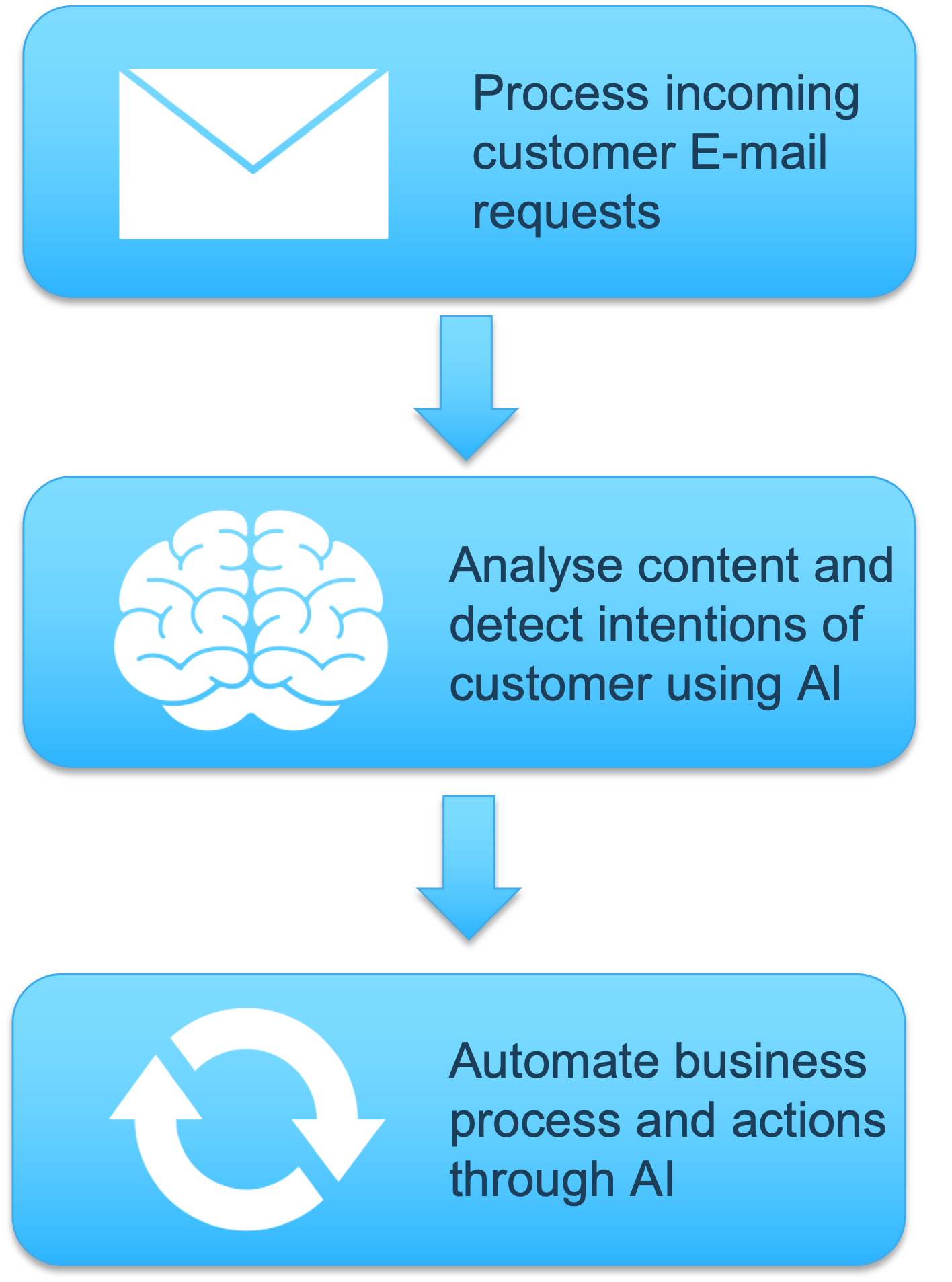

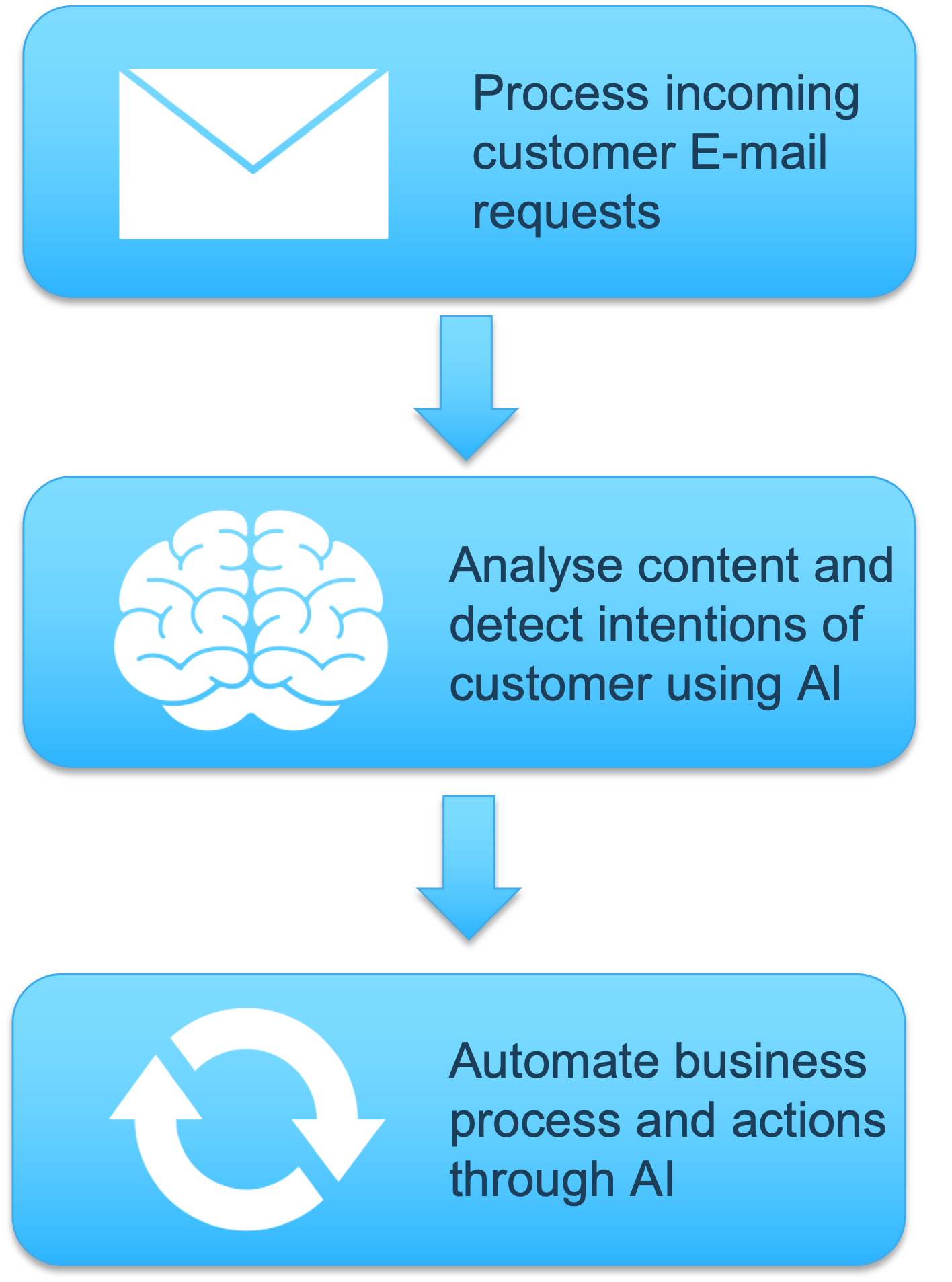

Customer Service E-Mail Automation

real estate, data analytics, automation, neural network, custom integration

Context

Dealing with tenants' requests is one of the core challenges of the real estate industry. The interaction is time-consuming because it involves managing information flows between internal systems (such as a company's CRM), employees, and external parties (such as tenants and contractors). At the same time, maintaining a good reputation as a service provider is essential. Because the communication is time-consuming and often involves tedious tasks, it is well suited for automation. Consider a medium-sized real estate company with a handful of administrative staff whose daily business includes, among other things, interacting with customers and organizing tasks based on their requests.

Solution

A bot was rolled out to the customer's IT environment that parses incoming e-mails, detects entities, and automatically adds the extracted information to the CRM.
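
The bot's neural entity detection is not described in detail here, so the Python sketch below uses hand-written regular expressions as a simple stand-in to show the shape of the step: take an e-mail body and return structured fields that could be written to a CRM. The patterns and field names are hypothetical and far less robust than a trained model.

```python
import re
from typing import Dict, List

# Hypothetical patterns standing in for a learned entity detector.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d ]{7,}\d")
UNIT_RE = re.compile(r"\b(?:apartment|unit|flat)\s+(\w+)", re.IGNORECASE)


def extract_entities(body: str) -> Dict[str, List[str]]:
    """Extract contact details and unit references from an e-mail body."""
    return {
        "emails": EMAIL_RE.findall(body),
        "phones": [p.strip() for p in PHONE_RE.findall(body)],
        # UNIT_RE has one capture group, so findall returns just the unit IDs.
        "units": UNIT_RE.findall(body),
    }
```

Keeping extraction behind a single function with a fixed output schema means a rule-based version like this could later be swapped for a trained model without changing the CRM integration.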

Benefits

As a direct result of the e-mail automation, employees had more time for relevant tasks and could focus on what matters. Additionally, more requests could be processed in the same amount of time.

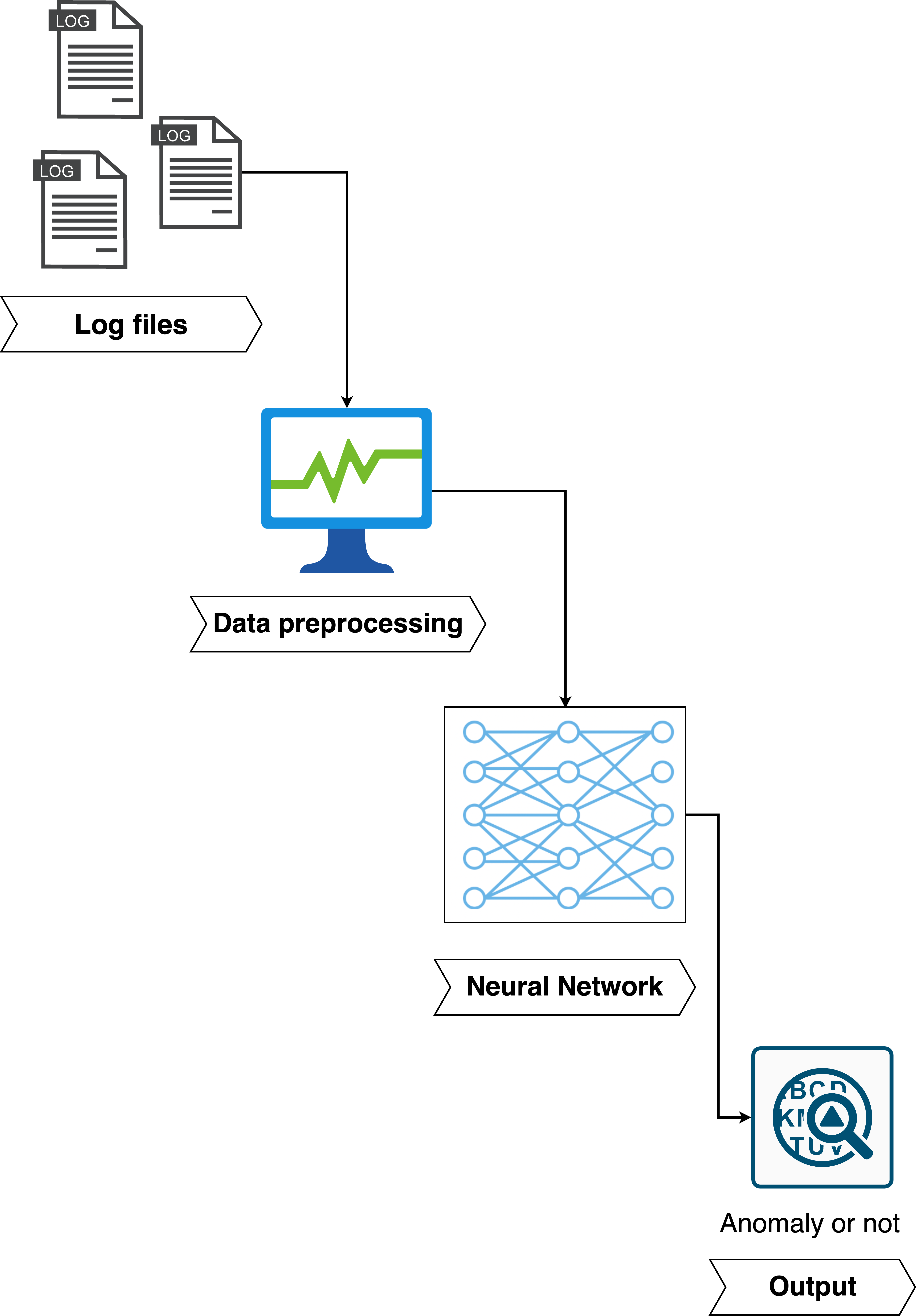

Log Anomaly Detection in Large-scale Systems & Computer Networks

anomaly detection, deep learning, model development, large scale systems

Problem

Given the high demand for monitoring and controlling system performance, companies need to detect events that deviate from normal data trends. Anomaly detection can therefore substantially enhance the stability and robustness of large-scale systems and computer networks.

Solution

The project involved utilizing machine learning and deep learning techniques to detect and isolate anomalies. It required a solid understanding of various concepts within these areas and their potential business applications in computer networks.

Benefits

Performing anomaly detection helps to identify abnormal behavior in the system, which can indicate potential threats or failures, enabling timely intervention to prevent or mitigate damage. It utilizes machine learning and deep learning techniques to automatically model the data's behavior, learn trends and periodicity, and identify anomalies.
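
As a minimal illustration of learning periodicity, the Python sketch below flags a point as anomalous when it deviates strongly from the values at the same phase in earlier periods (e.g., the same hour on previous days). This seasonal z-score is a simple statistical stand-in, not the machine learning or deep learning models used in the project; `period`, `n_periods`, `threshold`, and `min_sigma` are assumed parameters.

```python
from statistics import mean, pstdev
from typing import List, Sequence


def seasonal_anomalies(
    series: Sequence[float],
    period: int,
    n_periods: int = 3,
    threshold: float = 3.0,
    min_sigma: float = 1e-6,
) -> List[int]:
    """Indices whose value deviates from the same phase in earlier periods.

    Each point is compared with the values exactly 1..n_periods periods
    earlier, e.g. period=24 for hourly data with a daily cycle.
    """
    anomalies = []
    for i in range(period * n_periods, len(series)):
        history = [series[i - k * period] for k in range(1, n_periods + 1)]
        mu = mean(history)
        # Floor the spread so a perfectly regular history cannot divide by zero.
        sigma = max(pstdev(history), min_sigma)
        if abs(series[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies
```

Comparing against the same phase rather than a global average keeps regular daily peaks from being flagged; learned models extend the same idea to trends, multiple seasonalities, and many correlated signals.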

Sophisticated Recommender System for E-Commerce

recommendation system, deep learning, model development, streamline

Problem

A wholesale company was spending a lot of time manually generating recommendations for the 60’000 products in its store. The company aimed to automate the process while ensuring that customers still received correct recommendations.

Solution

After an extensive analysis of the data, a machine learning algorithm was developed that uses association rule mining to generate recommendations. Various validations were applied to ensure the credibility of the recommendations. Collaborative filtering could not be applied due to limitations of the ERP system.
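
The core of association rule mining can be sketched in a few lines of Python. This minimal version, assuming each order is a set of product IDs, mines only one-to-one rules A -> B and keeps those above hypothetical support and confidence thresholds. It is not the project's actual algorithm, which also applied further validations.

```python
from collections import Counter
from itertools import combinations
from typing import Iterable, List, Set, Tuple


def pair_rules(
    baskets: Iterable[Set[str]], min_support: float, min_confidence: float
) -> List[Tuple[str, str, float, float]]:
    """Mine rules (A, B, support, confidence) meaning "A -> B" from baskets.

    support(A -> B)    = share of baskets containing both A and B
    confidence(A -> B) = baskets with A and B / baskets with A
    """
    basket_list = [set(b) for b in baskets]
    n = len(basket_list)
    item_counts: Counter = Counter()
    pair_counts: Counter = Counter()
    for basket in basket_list:
        item_counts.update(basket)
        pair_counts.update(combinations(sorted(basket), 2))
    rules = []
    for (a, b), count in pair_counts.items():
        support = count / n
        if support < min_support:
            continue
        # Each unordered pair yields two directed rules.
        for lhs, rhs in ((a, b), (b, a)):
            confidence = count / item_counts[lhs]
            if confidence >= min_confidence:
                rules.append((lhs, rhs, support, confidence))
    # Strongest rules first; ties broken deterministically by name.
    return sorted(rules, key=lambda r: (-r[3], -r[2], r[0], r[1]))
```

Unlike collaborative filtering, this approach needs only order history, not per-customer interaction profiles. For a catalog of tens of thousands of products, established implementations such as Apriori or FP-Growth avoid counting every pair explicitly.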

Benefits

Simplifying the recommendation process resulted in more products being recommended to customers, enhancing their shopping experience and streamlining the order process on the e-commerce platform.

End-to-end MLOps implementation for a fitness app and real-time body movement recognition

mlops, body gesture recognition, model development, cloud computing

Problem

Machine Learning poses a significant challenge as many teams lack the necessary tools and processes to quantify the adverse impact of technical debt on their production systems. Operating, maintaining, and enhancing machine learning projects is a complex undertaking. To unlock the full potential and derive value from machine learning in production, there is a need for an end-to-end process to develop and operate machine learning applications.

Solution

We utilized our Effective MLOps methodology on a use case involving human body gesture recognition, which involved creating a mobile application that interfaces with a heart rate monitoring device, integrates with a machine learning model, and leverages a data pipeline. To achieve this, we built an MLOps pipeline using a combination of AWS SageMaker, Databricks, and open-source tools.

Benefits

We achieved the successful implementation of real-time gesture prediction utilizing the mobile application and machine learning model. This was made possible by incorporating a robust data pipeline and utilizing the four Cs of MLOps, which are Continuous Integration, Continuous Deployment, Continuous Monitoring, and Continuous Training.

Real-time Action Detection on Video Streams

action detection, video streaming, object detection, computer vision, deep learning

Problem

Video analytics company aiming to detect actions from live video streams for analytical insights.

Solution

Utilizing, adapting and implementing deep learning approaches to automatically detect actions within a video stream of a crowd.

Benefits

Automated action detection for crowds and integration of results into analytical platform for further behavioral data analysis.

Webinar on Data Science for Life Sciences

data science, life science, data proliferation, computer vision, health, pharma

Problem

Data is growing at an exponential rate within life science companies, where professionals face tremendous challenges in turning data into business value. Consequently, many life science companies need to expand their knowledge and define the best strategies for data analysis in order to maintain their competitiveness and seek new business opportunities.

Solution

A two-hour interactive webinar for professionals looking to change careers, guiding them through the journey of data science and the wide range of analytics tools used in life science fields. The webinar introduced the basic terminology and concepts of Data Science, Machine Learning, and Artificial Intelligence, as well as their business applications, especially in health and pharma.

Benefits

Attendees discovered methods and tools for extracting meaningful insights from raw life science data to solve complex, data-rich business and research problems. They also gained an understanding of the key principles, benefits, and limitations of AI and ML, as well as exposure to specific health and pharma use cases that help them see potential applications in their own context.

Didn't find your industry?

No Problem! Reach out and let's discuss how we can help you.

Address

Nauenstrasse 63, 4052 Basel

Switzerland